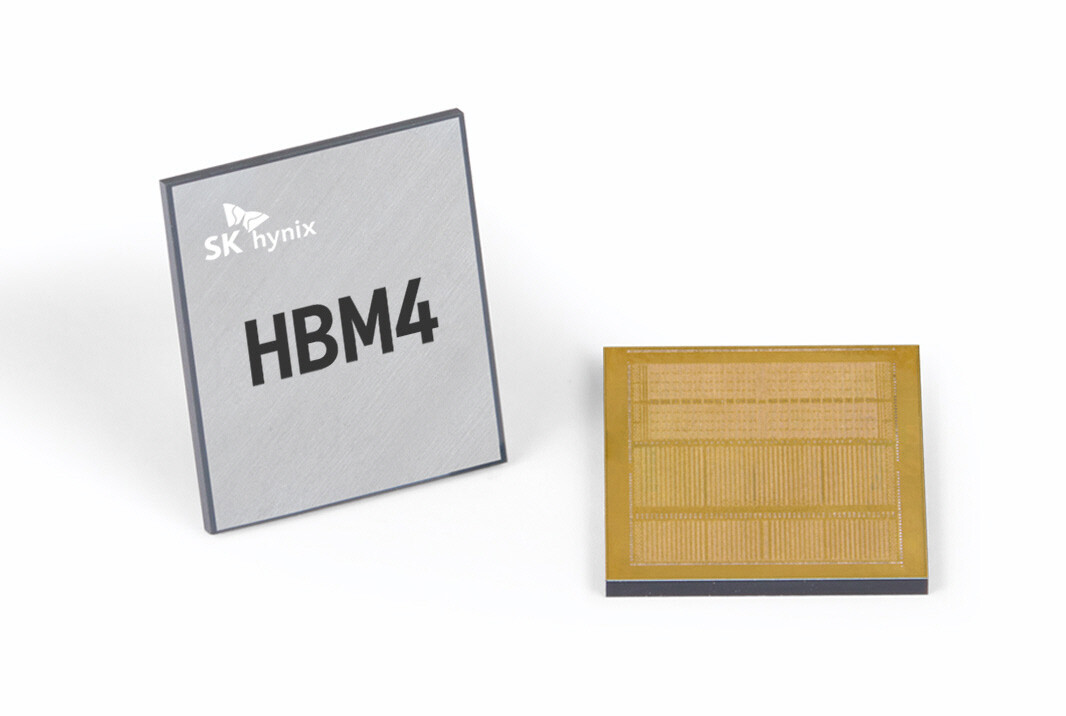

SK Hynix announced on March 19 that it has become the first in the industry to supply key customers with samples of its 12-stack HBM4, the sixth generation of high-bandwidth memory (HBM) and an ultra-high-performance DRAM designed for artificial intelligence (AI). With the current market dominated by fifth-generation HBM3E, the move is expected to ignite fierce competition in the next-generation HBM4 space.

HBM is a high-value product that vertically stacks multiple DRAM chips, dramatically boosting data processing speeds. SK Hynix has taken a leading position in the market, outpacing competitors like Samsung Electronics and U.S.-based Micron Technology.

Industry speculation points to Nvidia and Broadcom as likely recipients of the HBM4 samples. Nvidia’s flagship AI chip, Blackwell, currently uses HBM3E, while its next-generation Rubin chip, slated for release next year, is expected to incorporate HBM4. SK Hynix claims its HBM4 12-stack product offers world-class speed and capacity, achieving a bandwidth exceeding 2 terabytes per second (TB/s) for the first time. Bandwidth, in the context of HBM, refers to the total amount of data a single HBM package can process per second.

The company highlighted the product’s capabilities, stating, “It can process data equivalent to over 400 full-HD movies, each 5 gigabytes (GB) in size, in just one second.” This represents a speed increase of more than 60% compared to the previous generation, HBM3E.
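The movie comparison and the 60% figure can be cross-checked with simple arithmetic. The sketch below assumes decimal units (1 TB = 1,000 GB) and treats the quoted numbers as lower bounds, so the implied HBM3E figure is only a rough estimate, not a published specification. The function names are illustrative.

```python
# Sanity check of the figures quoted in the article.
# Assumption: decimal units, 1 TB = 1000 GB.
GB_PER_TB = 1000


def movies_to_tb_per_s(movies_per_second: int, movie_size_gb: float) -> float:
    """Convert 'N movies of S GB each, per second' into TB/s."""
    return movies_per_second * movie_size_gb / GB_PER_TB


def implied_baseline_tb_per_s(new_tb_per_s: float, speedup: float) -> float:
    """Bandwidth of the older part implied by a fractional speedup (0.60 = 60%)."""
    return new_tb_per_s / (1.0 + speedup)


# 400 movies x 5 GB = 2000 GB, i.e. the 2 TB/s floor quoted for HBM4.
hbm4_tb_per_s = movies_to_tb_per_s(400, 5)

# "More than 60% faster" implies an HBM3E figure of roughly this size.
hbm3e_tb_per_s = implied_baseline_tb_per_s(hbm4_tb_per_s, 0.60)

print(f"HBM4 (quoted floor): {hbm4_tb_per_s:.2f} TB/s")
print(f"HBM3E (implied, approximate): {hbm3e_tb_per_s:.2f} TB/s")
```

Under these assumptions the two claims are consistent with each other: 400 movies of 5 GB each amount to 2 TB, and a 60% gain over roughly 1.25 TB/s lands at the same 2 TB/s mark.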

SK Hynix also applied its “Advanced MR-MUF” process, already proven in earlier generations, to reach a capacity of 36GB, which it describes as a record for a 12-stack HBM product. The process minimizes chip warping, enhances heat dissipation, and maximizes product stability. “We’ve delivered the HBM4 12-stack samples ahead of schedule and have begun the certification process with our customers,” the company said.
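The 36GB figure can be broken down per layer. The short sketch below assumes that capacity is split evenly across the 12 stacked DRAM dies, which the article does not state explicitly; the function name is illustrative.

```python
def per_die_capacity(package_gb: float, layers: int) -> tuple[float, float]:
    """Return per-die capacity as (gigabytes, gigabits).

    Assumption: every stacked DRAM die contributes an equal share
    of the package capacity.
    """
    gigabytes = package_gb / layers
    gigabits = gigabytes * 8  # 8 bits per byte
    return gigabytes, gigabits


die_gb, die_gbit = per_die_capacity(36, 12)
print(f"Per die: {die_gb:g} GB ({die_gbit:g} Gb)")
```

On that assumption, each layer of the 12-stack product would hold 3GB, or 24 gigabits.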

Last year, SK Hynix became the first in the industry to mass-produce fifth-generation HBM3E in 8-stack and 12-stack configurations, supplying them to customers like Nvidia. The company plans to complete preparations for HBM4 12-stack mass production in the second half of this year, with shipments to begin based on customer timelines.

Meanwhile, Samsung Electronics aims to supply HBM3E to Nvidia in the first half of this year and start HBM4 mass production in the second half. Micron Technology, currently delivering HBM3E 8-stack products to Nvidia, is preparing to roll out 12-stack versions, with HBM4 mass production targeted for next year.

SK Hynix will showcase its advancements at Nvidia’s AI conference, “GTC 2025,” held in San Jose, California, through March 21 (local time). Under the theme “The Future of AI Powered by Memory,” the company will operate a booth featuring a mock-up of the HBM4 12-stack and “SOCAMM (Small Outline Compression Attached Memory Module),” a low-power DRAM-based memory module tailored for AI servers. SOCAMM, a new standard gaining attention, is being championed by Nvidia.

As the AI boom drives demand for cutting-edge memory solutions, SK Hynix’s early HBM4 rollout positions it as a frontrunner in the escalating race to power the next wave of AI innovation.

[Copyright (c) Global Economic Times. All Rights Reserved.]